Everything curl

Everything curl is an extensive guide for all things curl. The project itself, the command-line tool, the library, how everything started and how it came to be the useful tool it is today. It explains how we work on developing it further, what it takes to use it, how you can contribute with code or bug reports and why millions of existing users use it.

This book is meant to be interesting and useful to both casual readers and somewhat more experienced developers. It offers something for everyone to pick and choose from.

Do not read this book from front to back. Read the chapters or content you are curious about and flip back and forth as you see fit.

This book is an open source project in itself: open, completely free to download and read. Free for anyone to comment on, and available for everyone to contribute to and help out with. Send your bug reports, ideas, pull requests or critiques to us and I or someone else will work on improving the book accordingly.

This book will never be finished. I intend to keep working on it. While I may at some point consider it fairly complete, covering most aspects of the project (even if only that seems like an insurmountable goal), the curl project will continue to move so there will always be things to update in the book as well.

This book project started at the end of September 2015.

Site

https://everything.curl.dev is the home of this book. It features the book online in a web version.

This book is also provided as a PDF and an ePUB.

The book website is hosted by Fastly. The book content has been rendered by mdBook since March 18th, 2024.

Content

All book content is hosted on GitHub in the https://github.com/curl/everything-curl repository.

Author

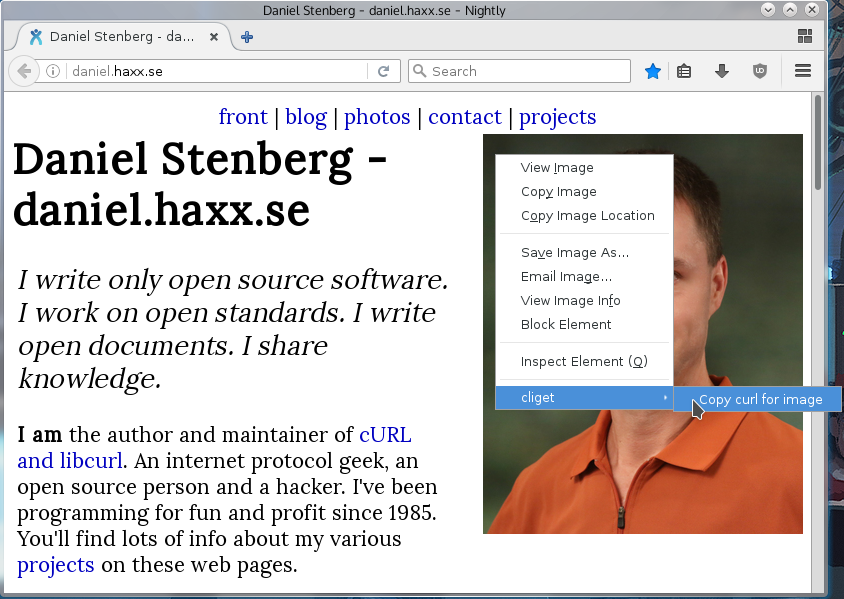

With the hope of becoming just a co-author of this material, I am Daniel Stenberg. I founded the curl project and I am a developer at heart—for fun and profit. I live and work in Stockholm, Sweden.

All there is to know about Daniel can be found on daniel.haxx.se.

Contribute

If you find mistakes, omissions, errors or blatant lies in this document, please send us a refreshed version of the affected paragraph and we will amend and update. We give credit to and recognize everyone who helps out.

Preferably, you could submit errors or pull requests on the book's GitHub page.

Contributors

Lots of people have reported bugs, improved sections or otherwise helped make this book the success it is. These friends include the following:

AaronChen0 on github, alawvt on github, Amin Khoshnood, amnkh on github, Anders Roxell, Angad Gill, Aris (Karim) Merchant, auktis on github, Ben Bodenmiller, Ben Peachey, bookofportals on github, Bruno Baguette, Carlton Gibson, Chris DeLuca, Citizen Esosa, Dan Fandrich, Daniel Brown, Daniel Sabsay, David Piano, DrDoom74 at GitHub, Emil Hessman, enachos71 on github, ethomag on github, Fabian Keil, faterer on github, Frank Dana, Frank Hassanabad, Gautham B A, Geir Hauge, Harry Wright, Helena Udd, Hubert Lin, i-ky on github, infinnovation-dev on GitHub, Jay Ottinger, Jay Satiro, Jeroen Ooms, Johan Wigert, John Simpson, JohnCoconut on github, Jonas Forsberg, Josh Vanderhook, JoyIfBam5, KJM on github, knorr3 on github, lowttl on github, Luca Niccoli, Manuel on github, Marius Žilėnas, Mark Koester, Martin van den Nieuwelaar, mehandes on github, Michael Kaufmann, Ms2ger, Mohammadreza Hendiani, Nick Travers, Nicolas Brassard, Oscar on github, Oskar Köök, Patrik Lundin, RekGRpth on github, Ryan McQuen, Saravanan Musuwathi Kesavan, Senthil Kumaran, Shusen Liu, Sonia Hamilton, Spiros Georgaras, Stephen, Steve Holme, Stian Hvatum, strupo on github, Viktor Szakats, Vitaliy T, Wayne Lai, Wieland Hoffmann, 谭九鼎

License

This document is licensed under the Creative Commons Attribution 4.0 license.

How to read

Here is an overview of the main sections of this book and what they cover.

1. The cURL project

How the project started, how we work and how often releases are made and more.

2. Network and protocols

What exactly are networks and protocols?

3. Install curl and libcurl

How and where to get and install curl.

4. Source code

A description of the curl source tree, how the code is laid out and how it works.

5. Build curl

How to build curl and libcurl from source.

6. Command line concepts

Start at the beginning. How do you use curl from a command line?

7. Command line transfers

Going deeper, looking at how to do and control Internet transfers with the curl command line tool.

8. Command line HTTP

Digging deeper on the HTTP specific actions to do with the curl command line tool.

9. Command line FTP

Learn how to do FTP specific operations with curl in this chapter.

10. libcurl

How libcurl works and how you use it when writing your own applications with it. The fundamentals.

11. libcurl transfers

How to set up and control libcurl to do Internet transfers using the API.

12. libcurl HTTP

A closer look at doing and controlling HTTP specific transfers with libcurl.

13. libcurl helpers

libcurl provides a set of additional APIs, helpers, that go a little beyond just transfers. These are APIs and subsystems that can make your libcurl-using application excel. Manage URLs, extract HTTP headers and more.

14. libcurl examples

Stand-alone examples using libcurl, showing off how easy it is to write a first simple application.

15. libcurl bindings

An overview of popular libcurl bindings and how similar they are to the libcurl C API.

16. libcurl internals

Under the hood it works like this…

17. Index

The index.

The cURL project

A funny detail about Open Source projects is that they are called projects, as if they were somehow limited in time or ever can get done. The cURL project is a number of loosely coupled individual volunteers working on writing software together with a common mission: to do reliable data transfers with Internet protocols, as Open Source.

- How it started

- The name

- What does curl do?

- Project communication

- Mailing list etiquette

- Mailing lists

- Reporting bugs

- Commercial support

- Releases

- Security

- Trust

- Code of Conduct

- Development

- The development team

- Users of curl

- Future

How it started

Back in 1996, Daniel Stenberg was writing an IRC bot in his spare time, an automated program that would offer services for the participants in a chatroom dedicated to the Amiga computer (#amiga on the IRC network EFnet). He came to think that it would be fun to get some updated currency rates and have his bot offer a service online for the chat room users to get current exchange rates, to ask the bot "please exchange 200 USD into SEK" or similar.

In order to have the provided exchange rates as accurate as possible, the bot would download the rates daily from a website that was hosting them. A small tool to download data over HTTP was needed for this task. A quick look-around at the time had Daniel find a tiny tool named httpget (written by the Brazilian developer Rafael Sagula). It did the job, almost, just needed a few little tweaks here and there.

Rafael released HttpGet 0.1 on November 11, 1996 and already in the next release, called 0.2 released in December that year, Daniel had his first changes included. Soon after that, Daniel had taken over maintenance of the few hundred lines of code it was.

HttpGet 1.0 was released on April 8 1997 with brand new HTTP proxy support.

We soon found and fixed support for getting currencies over GOPHER. Once FTP download support was added, the name of the project was changed and urlget 2.0 was released in August 1997. The HTTP-only days were already past.

The project slowly grew bigger. When upload capabilities were added and the name once again was misleading, a second name change was made and on March 20, 1998 curl 4 was released. (The version numbering from the previous names was kept.)

We consider March 20 1998 to be curl's birthday.

The name

Naming things is hard.

The tool was about uploading and downloading data specified with a URL. It was a client-side program (the 'c'), a URL client, and would show the data (by default). 'c' stands for Client and URL: cURL. The fact that it could also be read as see URL helped.

Nothing more was needed so the name was selected and we never looked back again.

Later on, someone suggested that curl could actually be a clever recursive acronym (where the first letter in the acronym refers back to the same word): Curl URL Request Library.

While that is awesome, it was actually not the original thought. We wish we were that clever…

There are and were other projects using the name curl in various ways, but we were not aware of them by the time our curl came to be.

Uppercase or lowercase

The project can be spelled with mixed case like cURL, even if we also often just write it in all lowercase: curl. You are free to use the version you like. We tend to write about the command line tool and the library with all lowercase: curl and libcurl.

Pronunciation

Most of us pronounce curl with an initial k sound, just like the English word curl. It rhymes with words like girl and earl. Merriam Webster has a short WAV file to help.

Confusions and mix-ups

Soon after our curl was created another curl appeared that created a programming language. That curl still exists.

Several libcurl bindings for various programming languages use the term curl or CURL in part or completely to describe their bindings. Sometimes you find users talking about curl but referring to neither the command-line tool nor the library that is made by this project.

As a verb

'To curl something' is sometimes used to refer to using a non-browser tool to download a file or resource from a URL.

What does curl do?

cURL is a project and its primary purpose and focus is to make Internet transfer related Open Source products:

- curl, the command-line tool

- libcurl, the transfer library with a C API

- trurl, the URL parser and manipulation tool

- wcurl, the simple downloader tool

Everything and anything that is related to client-side Internet protocol transfers can be considered curl's business. Things that are not related to that should be avoided and be left for other projects and products.

It could be important to also consider that curl and libcurl try to avoid handling the actual data that is transferred. It has, for example, no knowledge about HTML or anything else of the content that is popular to transfer over HTTP, but it knows all about how to transfer such data over HTTP.

curl products are frequently used not only to drive thousands or millions of scripts and applications for an Internet connected world, but they are also widely used for server testing, protocol fiddling and trying out new things.

curl, the command line tool

Running curl from the command line was natural and Daniel never considered anything else than that it would output data on stdout, to the terminal, by default. The "everything is a pipe" mantra of standard Unix philosophy was something Daniel believed in. curl is like 'cat' or one of the other Unix tools; it sends data to stdout to make it easy to chain together with other tools to do what you want. That is also why virtually all curl options that allow reading from a file or writing to a file, also have the ability to select doing it to stdout or from stdin.

Following the Unix style of how command-line tools work, there was also never any question about whether curl should support multiple URLs on the command line.

The command-line tool is designed to work perfectly from scripts or other automatic means. It does not feature any GUI or UI other than mere text in and text out.

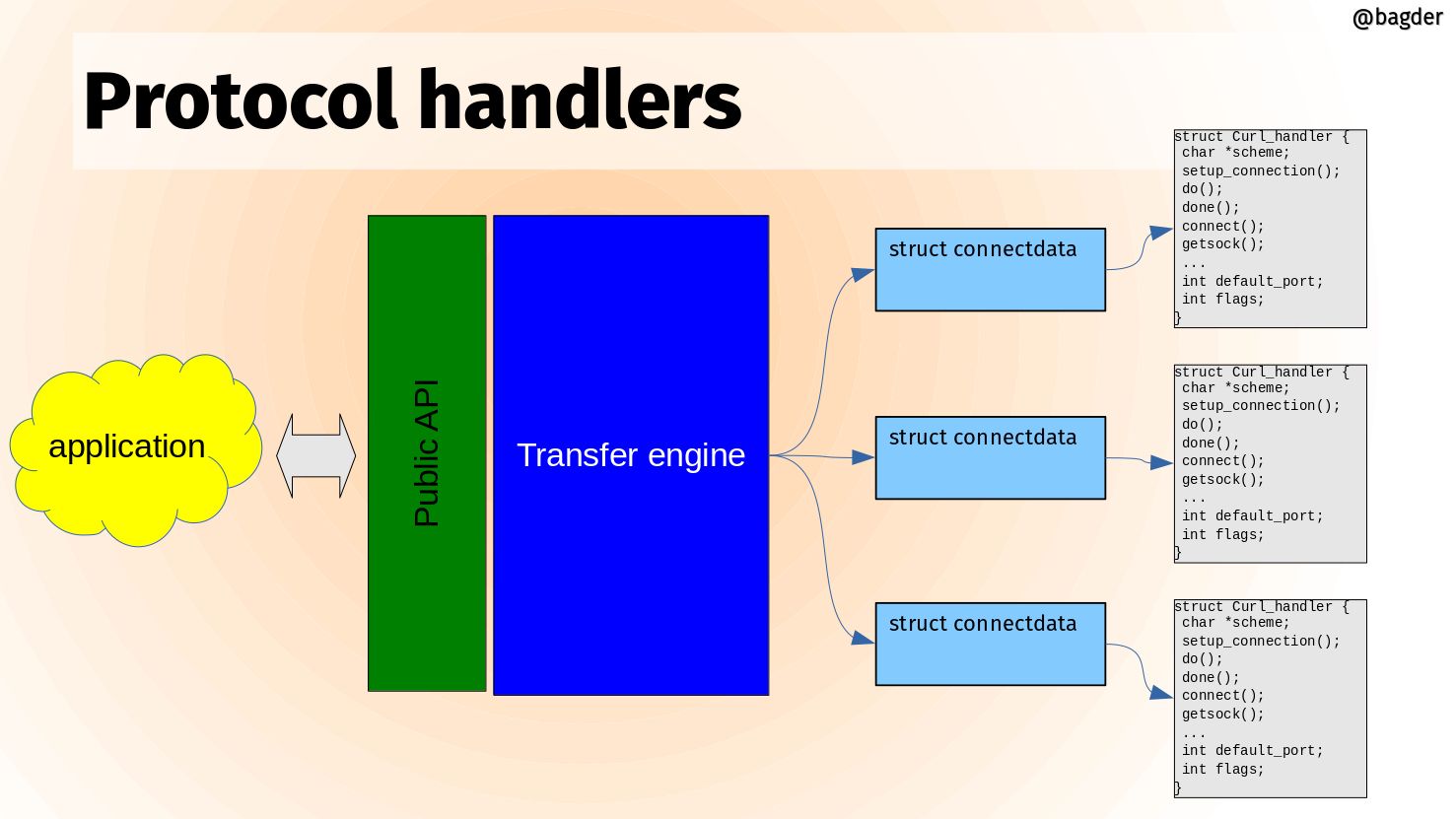

libcurl, the library

While the command-line tool came first, the network engine was ripped out and converted into a library during the year 2000 and the concepts we still have today were introduced with libcurl 7.1 in August 2000. Since then, the command line tool has been a thin layer of logic to make a tool around the library that does all the heavy lifting.

libcurl is designed and meant to be available for anyone who wants to add client-side file transfer capabilities to their software, on any platform, any architecture and for any purpose. libcurl is also extremely liberally licensed to avoid that becoming an obstacle.

libcurl is written in traditional and conservative C. Where other languages are preferred, people have created libcurl bindings for them.

The library is used in every imaginable sort of embedded device where Internet transfers are needed: car infotainment, televisions, Blu-Ray players, set-top boxes, printers, routers, game systems, etc.

trurl, a URL tool

Managing, parsing and handling URLs is tricky. Not only because URLs are insufficiently standardized so that different parsers tend to parse the same URL differently, but also simply because their syntax is hard to get right.

trurl is a command line tool to help users parse, generate and manipulate URLs in scripts and on command lines. By using trurl, scripts can extract details from URLs safely, update URLs or create URLs without having to figure out the intricacies of the URL standards.

An extra reason for using trurl in combination with curl, is that trurl is built using libcurl, meaning that both tools use the same URL parser and therefore understand and treat URLs exactly the same way. This is important because using mixed URL parsers on the same URL is a common reason for security vulnerabilities.
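To make "extract details from URLs" and "update URLs" concrete, here is a minimal sketch of the same kind of operations. It uses Python's standard-library `urllib.parse`, not trurl or libcurl, so it illustrates the idea only: as noted above, different parsers can disagree on unusual URLs, and this one may not treat every URL the way curl does. The function names and the example URL are made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def components(url: str) -> dict:
    """Split a URL into its parts using Python's parser (not libcurl's)."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,
        "port": parts.port,
        "path": parts.path,
        "query": parts.query,
        "fragment": parts.fragment,
    }


def replace_host_and_query(url: str, netloc: str, query: str) -> str:
    """Return the URL with a new network location and query string.

    Replacing the whole netloc also replaces any port it contained.
    """
    parts = urlsplit(url)
    return urlunsplit(parts._replace(netloc=netloc, query=query))


if __name__ == "__main__":
    # Hypothetical example URL.
    url = "https://example.com:8443/docs/index.html?lang=en#install"
    for name, value in components(url).items():
        print(f"{name}: {value}")
    print(replace_host_and_query(url, "example.org", "lang=sv"))
```

Working on parsed components rather than on the raw string is what lets a script avoid hand-written splitting on `:`, `/` and `?`, which is where many URL handling mistakes come from.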

wcurl, a URL downloader

For many years it has been said and people have reported that using curl to download URLs is difficult because they can never remember the exact command line options to make curl do just that: download a single URL or two into a local file. People would cite that as the primary reason they still use alternative command line tools for that use case.

wcurl is the command line tool that simply downloads URLs without the user having to remember any parameter at all.

Project communication

cURL is an Open Source project consisting of voluntary members from all over the world, living and working in a large number of the world's time zones. To make such a setup actually work, communication and openness are key. We keep all communication public and we use open communication channels. Most discussions are held on mailing lists, and we use bug trackers where all issues are discussed and handled with full insight for everyone who cares to look.

It is important to realize that we are all jointly taking care of the project, we fix problems and we add features. Sometimes a regular contributor grows bored and fades away, sometimes a new eager contributor steps out from the shadows and starts helping out more. To keep this ship going forward as well as possible, it is important that we maintain open discussions and that is one of the reasons why we frown upon users who take discussions privately or try to email individual team members about development issues, questions, debugging or whatever.

In this day, mailing lists may be considered the old style of communication — no fancy web forums or similar. Using a mailing list is therefore becoming an art that is not practiced everywhere and may be a bit strange and unusual to you. It is just about sending emails to an address that then sends that email out to all the subscribers. Our mailing lists have at most a few thousand subscribers. If you are mailing for the first time, it might be good to read a few old mails first to get to learn the culture and what's considered good practice.

The mailing lists and the bug tracker have changed hosting providers a few times and there are reasons to suspect it might happen again in the future. It is just the kind of thing that happens to a project that lives for a long time.

A few users also hang out on IRC in the #curl channel on libera.chat.

Mailing list etiquette

Like many communities and subcultures, we have developed guidelines and rules of what we think is the right way to behave and how to communicate on the mailing lists. The curl mailing list etiquette follows the style of traditional Open Source projects.

Do not mail a single individual

Many people send one question directly to one person. One person gets many mails, and there is only one person who can give you a reply. The question may be something that other people also want to ask. These other people have no way to read the reply but to ask the one person the question. The one person consequently gets overloaded with mail.

If you really want to contact an individual and perhaps pay for his or her services, by all means go ahead, but if it is just another curl question, take it to a suitable list instead.

Reply or new mail

Please do not reply to an existing message as a shortcut to post a message to the lists.

Many mail programs and web archivers use information within mails to keep them together as threads, as collections of posts that discuss a certain subject. If you do not intend to reply on the same or similar subject, do not just hit reply on an existing mail and change the subject; create a new mail.
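The "information within mails" is typically the `Message-ID`, `In-Reply-To` and `References` headers defined by the Internet mail format. The sketch below, using Python's standard `email` package, shows why changing only the subject does not start a new thread: the reply still points back at the original message. The helper names, the subjects and the `example.invalid` domain are made up for illustration, and real mail clients may set more headers than this.

```python
from email.message import EmailMessage
from email.utils import make_msgid


def new_mail(subject: str, body: str) -> EmailMessage:
    """Create a fresh message with its own Message-ID and no thread headers."""
    msg = EmailMessage()
    msg["Message-ID"] = make_msgid(domain="example.invalid")
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def reply_to(original: EmailMessage, subject: str, body: str) -> EmailMessage:
    """Create a reply, roughly the way hitting 'reply' in a mail client does."""
    msg = new_mail(subject, body)
    # These two headers are what threading software commonly keys on.
    msg["In-Reply-To"] = str(original["Message-ID"])
    msg["References"] = str(original["Message-ID"])
    return msg


if __name__ == "__main__":
    original = new_mail("HTTP/2 upload stalls", "Details of the problem...")
    # Hitting reply and changing the subject keeps the thread headers.
    hijack = reply_to(original, "Unrelated: how do I build on Windows?", "Hi...")
    fresh = new_mail("How do I build on Windows?", "Hi...")

    print("hijack threads under:", hijack["In-Reply-To"])
    print("fresh mail threads under:", fresh["In-Reply-To"])
```

The message created with `new_mail` has no `In-Reply-To` header at all, which is why creating a new mail is the reliable way to start a new thread.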

Reply to the list

When replying to a message from the list, make sure that you do group reply or reply to all, and not just reply to the author of the single mail you reply to.

We are actively discouraging replying back to just a single person privately. Keep follow-ups on discussions on the list.

Use a sensible subject

Please use a subject of the mail that makes sense and that is related to the contents of your mail. It makes it a lot easier to find your mail afterwards and it makes it easier to track mail threads and topics.

Do not top-post

If you reply to a message, do not use top-posting. Top-posting is when you write the new text at the top of a mail and you insert the previous quoted mail conversation below. It forces users to read the mail in a backwards order to properly understand it.

This is why top posting is so bad:

A: Because it messes up the order in which people normally read text.

Q: Why is top-posting such a bad thing?

A: Top-posting.

Q: What is the most annoying thing in email?

Apart from the screwed-up read order (especially when mixed together in a thread when someone responds using the mandated bottom-posting style), it also makes it impossible to quote only parts of the original mail.

When you reply to a mail you let the mail client insert the previous mail quoted. Then you put the cursor on the first line of the mail and you move down through the mail, deleting all parts of the quotes that do not add context for your comments. When you want to add a comment you do so, inline, right after the quotes that relate to your comment. Then you continue downwards again.

When most of the quotes have been removed and you have added your own words, you are done.

HTML is not for mails

Please switch off those HTML encoded messages. You can mail all those funny mails to your friends. We speak plain text mails.

Quoting

Quote as little as possible. Just enough to provide the context you cannot leave out. A lengthy description can be found here.

Digest

We allow subscribers to subscribe to the digest version of the mailing lists. A digest is a collection of mails lumped together in one single mail.

Should you decide to reply to a mail sent out as a digest, there are two things you MUST consider if you really cannot subscribe normally instead:

- Cut off all mails and chatter that is not related to the mail you want to reply to.

- Change the subject name to something sensible and related to the subject, preferably even the actual subject of the single mail you wanted to reply to.

Please tell us how you solved the problem

Many people mail questions to the list, people spend some of their time and make an effort in providing good answers to these questions.

If you are the one who asks, please consider responding once more in case one of the hints was what solved your problems. Those who write answers feel good to know that they provided a good answer and that you fixed the problem. Far too often, the person who asked the question is never heard from again, and we never get to know if they are gone because the problem was solved or perhaps because the problem was unsolvable.

Getting the solution posted also helps other users that experience the same problem(s). They get to see (possibly in the web archives) that the suggested fixes actually helped at least one person.

Mailing lists

Some of the most important mailing lists are…

curl-users

The main mailing list for users and developers of the curl command-line tool, for questions and help around curl concepts, command-line options, the protocols curl can speak or even related tools. We tend to move development issues or more advanced bug fixes discussions over to curl-library instead, since libcurl is the engine that drives most of curl.

See curl-users

curl-library

The main development list, and also for users of libcurl. We discuss how to use libcurl in applications as well as development of libcurl itself. Questions on libcurl behavior, debugging and documentation issues etc.

See curl-library

curl-announce

This mailing list only gets announcements about new releases and security problems—nothing else. This one is for those who want a more casual feed of information from the project.

See curl-announce

Reporting bugs

The development team does a lot of testing. We have a whole test suite that is run frequently every day on numerous platforms in order to exercise all code and make sure everything works as expected.

Still, there are times when things do not work the way they should, and we depend on people reporting it to us.

A bug is a problem

Any problem can be considered a bug. A weirdly phrased wording in the manual that prevents you from understanding something is a bug. A surprising side effect of combining multiple options can be a bug—or perhaps it should be better documented? Perhaps the option does not do at all what you expected it to? That is a problem and we should fix it.

Problems must be known to get fixed

This may sound easy and uncomplicated but is a fundamental truth in our and other projects. Just because it is an old project and has thousands of users does not mean the development team knows about the problem you just stumbled into. Maybe users have not paid attention to details as much as you have, or perhaps it just never triggered for anyone else.

We rely on users experiencing problems to report them. We need to know of their existence in order to fix them.

Fixing the problems

Software engineering is, to a large degree, about fixing problems. To fix a problem a developer needs to understand how to repeat it, and to do that they need to be told what set of circumstances triggered the problem.

A good bug report

A good report explains what happened and what you thought was going to happen. Tell us exactly what versions of the different components you used and take us step by step through what you did to arrive at the problem.

After you submit a bug report, you can expect there to be follow-up questions or perhaps requests that you try out various things so the developer can narrow down the suspects and make sure your problem is properly located.

A bug report that is submitted then abandoned by the submitter risks getting closed if the developer fails to understand it, fails to reproduce it or faces other problems when working on it. Do not abandon your report.

Report curl bugs in the curl bug tracker on GitHub.

Testing

Testing software thoroughly and properly is a lot of work. Testing software that runs on dozens of operating systems and CPU architectures, with server implementations which have their own sets of bugs and interpretations of the specs, is even more work.

The curl project has a test suite that iterates over all existing test cases, runs each test and verifies that the outcome is correct and that no other problem happened, such as a memory leak or something fishy in the protocol layer.

The test suite is meant to be run after you have built curl yourself. There are a number of volunteers who also help out by running the test suite automatically a few times per day to make sure the latest commits are tested. This way we discover the worst flaws not long after their introduction.

We do not test everything, and even when we try to test things there are always subtle bugs that get through, some that are only discovered years later.

Due to the nature of different systems and funny use cases on the Internet, eventually some of the best testing is done by users when they run the code to perform their own use cases.

Another limiting factor with the test suite is that the test setup itself is less portable than curl and libcurl, so there are in fact platforms where curl runs fine but the test suite cannot execute at all.

Commercial support

Commercial support for curl and libcurl is offered and provided by Daniel Stenberg (the curl founder) through the company wolfSSL.

wolfSSL offers world-wide commercial support on curl done by the masters of curl. wolfSSL handles curl customization, ports to new operating systems, feature development, patch maintenance, bug fixing, upstreaming, training, code reviews of libcurl API use, security scanning your curl use and more - with several different support options from basic up to full 24/7 support. With guaranteed response times.

See the support page for contact details.

Releases

A release in the curl project means packaging up all the source code that is in the master branch of the code repository, signing the package, tagging the point in the code repository, and then putting it up on the website for the world to download.

It is one single source code archive for all platforms curl can run on. It is the one and only package for both curl and libcurl.

We never ship any curl or libcurl binaries from the project with one exception: we host official curl binaries built for Windows users. All the other packaged binaries that are provided with operating systems or on other download sites are done by gracious volunteers outside of the project.

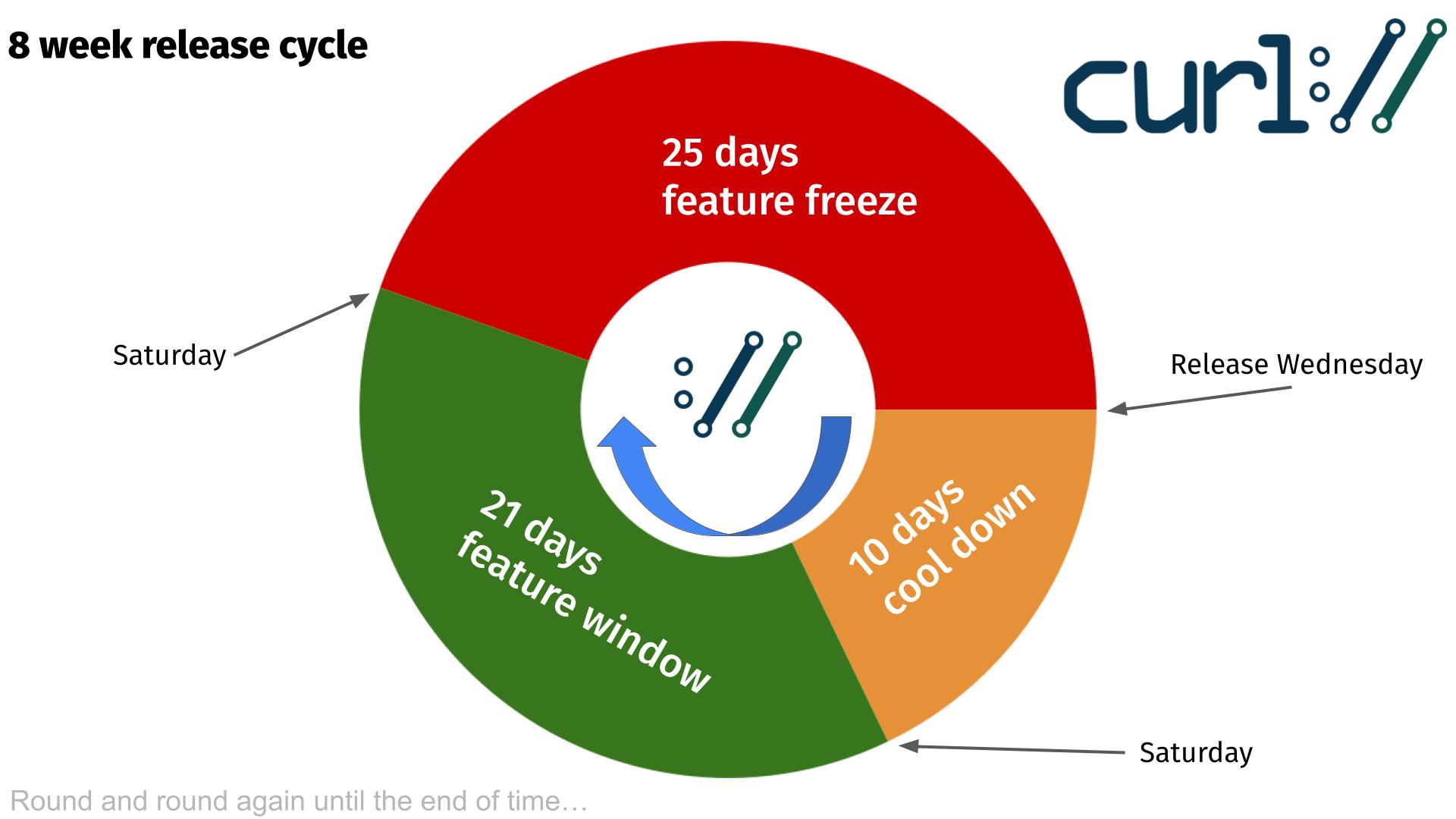

As of several years back, we make an effort to do our releases on an eight week cycle and unless some really serious and urgent problem shows up we stick to this schedule. We release on a Wednesday, and then again a Wednesday eight weeks later and so it continues. Non-stop.
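The arithmetic behind that cadence is simple enough to sketch. The snippet below assumes a strict eight-week step from a given Wednesday and ignores the exceptions mentioned above (serious and urgent problems can shift a release). The function name and the starting date are illustrative choices, not part of any curl tooling.

```python
from datetime import date, timedelta

CYCLE = timedelta(weeks=8)  # the eight-week cadence described above
WEDNESDAY = 2               # date.weekday() counts Monday as 0


def upcoming_releases(last_release: date, count: int) -> list[date]:
    """Return the next `count` planned release dates after `last_release`."""
    if last_release.weekday() != WEDNESDAY:
        raise ValueError("releases are expected to land on a Wednesday")
    return [last_release + CYCLE * n for n in range(1, count + 1)]


if __name__ == "__main__":
    # Illustrative starting point; any Wednesday works.
    for day in upcoming_releases(date(2025, 2, 5), 3):
        print(day.isoformat())
```

Because eight weeks is a whole number of weeks, every computed date falls on the same weekday as the starting date, which is why a fixed cycle keeps releases on Wednesdays.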

For every release we tag the source code in the repository with the curl version number and we update the changelog.

We had done a total of 265 releases by February 2025. The entire release history and changelog is available in our curl release log.

Release cycle

Daily snapshots

Every single change to the source code is committed and pushed to the source code repository. This repository is hosted on github.com and is using git these days (but has not always been this way). When building curl off the repository, there are a few things you need to generate and set up that sometimes cause people some problems or just friction. To help with that, we provide daily snapshots.

The daily snapshots are generated daily (clever naming, right?) as if a release had been made at that point. The process packages all source code and all files that are normally part of a release and uploads the package to this special place to allow interested people to get the latest code to test, to experiment or whatever.

The snapshots are kept for around 20 days until deleted.

Security

Security is a primary concern for us in the curl project. We take it seriously and we work hard on providing secure and safe implementations of all protocols and related code. As soon as we get knowledge about a security related problem or just a suspected problem, we deal with it and we attempt to provide a fix and security notice no later than in the next pending release.

We use a responsible disclosure policy, meaning that we prefer to discuss and work on security fixes out of the public eye and we alert the vendors on the openwall.org list a few days before we announce the problem and fix to the world. This, in an attempt to shorten the time span the bad guys can take advantage of a problem until a fixed version has been deployed.

Past security problems

During the years we have had our fair share of security related problems. We work hard on documenting every problem thoroughly with all details listed and clearly stated to aid users. Users of curl should be able to figure out what problems their particular curl versions and use cases are vulnerable to.

To help with this, we present this waterfall chart showing how all vulnerabilities affect which curl versions and we have this complete list of all known security problems since the birth of this project.

Backdoors and supply chain risks

With libcurl being installed and running in billions of installations all over the world and in countless different environments, we recognize that it is an ideal target for someone who wants a backdoor somewhere.

A new or old maintainer might at any point propose a change that sounds innocent and well-meaning but has a disguised malicious intent.

To mitigate such risks, we apply established procedures and techniques:

- GitHub. The curl project uses https://github.com/curl/curl as its main source code git repository since 2010. We rely on their hosting to secure access as per our configuration.

- 2FA required. We require all maintainers with push access to git to have two-factor authentication enabled, to reduce the risk that attackers can impersonate them and use their credentials to push source code changes. We rely on GitHub's 2FA setup.

- Reviews. Every contribution that is proposed for inclusion in the project is reviewed by a maintainer. All changes are always done publicly in the open to allow all interested parties to participate. No invitation needed. All changes are also automatically checked, tested and scanned by numerous tools before being accepted.

- Readable code. We believe in readable code that follows our code style. Easy to read makes it easy to debug. If code is hard to read it should be improved until it gets easy to read. With easy to read code, smuggling malicious payloads or hiding nefarious functionality is excruciatingly hard.

- Tests. We have a large test suite that is always growing. We do not accept changes that break existing tests, and new functionality needs to bring new tests with it. We run several hundred thousand tests on each proposed change to make sure existing functionality remains unaffected. This includes fuzzers, static code analyzers, fault injectors and more.

- No binary blobs. All files stored in version control, in the git repository, are readable or are otherwise small and documented. There is no place anywhere for any hidden encrypted payload. We run a scanner on all files on every change to detect binary files and the few files that need to remain looking binary are manually vetted and verified against a checksum.

- Reproducible builds. curl releases are shipped as tarballs that are hosted on the curl website (https://curl.se). We provide documentation, docker setups and configurations etc to allow anyone wanting to easily reproduce our release builds to generate identical images - proving that what we ship is only contents taken from the git repository plus other correct and properly generated contents.

- Signed commits. Over 90% - not all - of recent commits were signed to help prove provenance. Signing commits is not yet a mandatory requirement for committers but we hope to gradually increase the share over time and make it mandatory soon.

- Signed releases. Every release, every uploaded tarball, is signed by Daniel. This helps to prove that the files have not been tampered with since they were produced. We have opted to not sign them by multiple persons only because of the added complexity for the relatively small extra protection.

- Signed tags. Every release is generated from the exact state of the git tree where a corresponding signed tag is set. The name of the release tag is the same as the release version.

- Fix all vulnerabilities quickly. Whenever we receive a security vulnerability report, we create and ship a fix in the next pending release. Sometimes sooner than previously planned. Only in extremely rare cases does it take longer than a release cycle, but in the name of accuracy and correctness we do reserve the right to spend time on research to get it right. With every fixed security vulnerability we release a detailed description of the flaw including the exact commit that introduced the problem, recommendations for users and more. Further, the security advisories get announced to the world.
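The checksum and reproducible-build points above both come down to comparing bytes: a vetted file should still match its recorded digest, and a locally rebuilt tarball should be identical to the published one. Here is a minimal sketch of that comparison, assuming both files are already on disk. The function names are hypothetical and not part of any curl tooling, and SHA-256 is just an assumed choice of digest.

```python
import hashlib


def sha256_of(path, chunk_size=65536):
    """Return the hex SHA-256 digest of the file at `path`, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read fixed-size chunks so large tarballs do not need to fit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_contents(path_a, path_b):
    """True when both files hash to the same SHA-256 value."""
    return sha256_of(path_a) == sha256_of(path_b)
```

A digest comparison only shows that two files are identical. It says nothing about who produced them, which is what the signatures described above are for.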

Trust

For software to conquer the world, it needs to be trusted. It takes trust to build more trust and it can all be broken down really fast if the foundation is proven to have cracks.

In the curl project we build trust for our users in a few different ways:

- We are completely transparent about everything. Every decision, every discussion as well as every line of code and every considered code change are always public and done in the open.

- We work hard to write reliable code. We write test cases, we review code, we document best practices and we have a style guide that helps us keep code consistent.

- We stick to promises and guarantees as much as possible. We do not break APIs and we do not abandon support for old systems.

- Security is of utmost importance. We take every reported incident seriously, we realize that we must fix all known problems, and we need to do it responsibly. We do our best to not endanger our users.

- We act like adults. We can be silly and we can joke around, but we do it responsibly and we follow our Code of Conduct. Everyone should even be able to trust us to behave.

Code of Conduct

As contributors and maintainers of this project, we pledge to respect all people who contribute through reporting issues, posting feature requests, updating documentation, submitting pull requests or patches, and other activities.

We are committed to making participation in this project a harassment-free experience for everyone, regardless of level of experience, gender, gender identity and expression, sexual orientation, disability, personal appearance, body size, race, ethnicity, age, or religion.

Examples of unacceptable behavior by participants include the use of sexual language or imagery, derogatory comments or personal attacks, trolling, public or private harassment, insults, or other unprofessional conduct.

Project maintainers have the right and responsibility to remove, edit, or reject comments, commits, code, wiki edits, issues, and other contributions that are not aligned to this Code of Conduct. Project maintainers who do not follow the Code of Conduct may be removed from the project team.

This code of conduct applies both within project spaces and in public spaces when an individual is representing the project or its community.

Instances of abusive, harassing, or otherwise unacceptable behavior may be reported by opening an issue or contacting one or more of the project maintainers.

Development

We encourage everyone to participate in the development of curl and libcurl. We appreciate all the help we can get and while the main portion of this project is source code, there is a lot more than just coding and debugging help that is needed and useful.

We develop and discuss everything in the open, preferably on the mailing lists.

Source code on GitHub

The source code to curl and libcurl has also been provided and published publicly, and it continues to be uploaded to the main website for every release.

Since March 2010, the curl source code repository has been hosted on github.com. By being up to date with the changes there, you can follow our day to day development closely.

The development team

Daniel Stenberg is the founder and self-proclaimed leader of the project. Everybody else that participates or contributes in the project has thus arrived at a later point. Some contributors worked for a while and then left again. Most contributors hang around only for a short while to get their bug fixed or feature merged or similar. Counting all contributors we know the names of, we have received help from more than 3,300 individuals.

More than 1,300 individuals have authored commits that have been merged into the source code repository. Of course, many of those authors only ever contributed a single commit so far.

There is no formal membership or anything that needs to be done to join the project. If you participate in communication or development, you are part of the project. Every contributor decides for themselves exactly how much and in what ways to participate.

The full list of people who have ever made ten or more commits within a single year in the project is, in alphabetical order:

Alessandro Ghedini, Ben Greear, Benoit Neil, Bill Hoffman, Bill Nagel, Björn Stenberg, Brad Hards, Christian Schmitz, Dan Fandrich, Daniel Gustafsson, Daniel Stenberg, Dominick Meglio, Emanuele Torre, Emil Engler, Evgeny Grin, Fabian Frank, Fabian Keil, Gergely Nagy, Gisle Vanem, Guenter Knauf, Harry Sintonen, Isaac Boukris, Jacob Hoffman-Andrews, Jakub Zakrzewski, James Housley, Jan Venekamp, Jay Satiro, Jiri Hruska, Joe Mason, Johannes Schindelin, Josh Soref, Julien Chaffraix, Kamil Dudka, Marc Hoersken, Marcel Raad, Mark Salisbury, Marty Kuhrt, Max Dymond, Michael Kaufmann, Michael Osipov, Michal Marek, Michał Antoniak, Nicholas Nethercote, Nick Zitzmann, Nikos Mavrogiannopoulos, Orgad Shaneh, Patrick Monnerat, Peter Wu, Philip Heiduck, Rikard Falkeborn, Ruslan Baratov, Ryan Schmidt, Simon Warta, Stefan Eissing, Steinar H. Gunderson, Sterling Hughes, Steve Holme, Svyatoslav Mishyn, Tal Regev, Tatsuhiro Tsujikawa, Tor Arntsen, Viktor Szakats, Yang Tse

Users of curl

We estimate that there are more than twenty billion curl installations in the world. It makes a good line to say but in reality we, of course, do not have any numbers that exact. We just estimate and guess based on observations and trends. It also depends on exactly what we consider "an installation". Let's elaborate.

Open Source

The project being Open Source and liberally licensed means that just about anyone can redistribute curl in source format or built into binary form.

Counting downloads

The curl command-line tool and the libcurl library are available for download for most operating systems via the curl website, they are provided via third-party installers for a bunch more, and they come installed by default with even more operating systems. This makes counting downloads from the curl website completely inappropriate as a means of measurement.

Finding users

So, we cannot count downloads and anyone may redistribute it and nobody is forced to tell us they use curl. How can we figure out the numbers? How can we figure out the users? The answer is that we really cannot with any decent level of accuracy.

Instead we rely on witness reports, circumstantial evidence, findings on the Internet, the occasional "about box" or license agreement mentioning curl, or authors who ask for help and tell us about their use.

The curl license says users need to repeat it somewhere, like in the documentation, but that is not easy for us to find in many cases and it is also not easy for us to do anything about should they decide not to follow the small license requirement.

Command-line tool users

The command-line tool curl is widely used by programmers around the world in shell and batch scripts, to debug servers and to test out things. There is no doubt it is used by millions every day.

Embedded library

libcurl is what makes our project reach a really large volume of users. The ability to quickly and easily get client side file transfer abilities into your application is desirable for a lot of users, and then libcurl's great portability also helps: you can write more or less the same application on a wide variety of platforms and you can still keep using libcurl for transfers.

libcurl being written in C with no or just a few required dependencies also helps to get it used in embedded systems.

libcurl is popularly used in smartphone operating systems, in car infotainment setups, in television sets, in set-top boxes, in audio and video equipment such as Blu-Ray players and higher-end receivers. It is often used in home routers and printers.

A fair number of best-selling games are also using libcurl, on Windows and game consoles.

In website backends

The libcurl binding for PHP was one of, if not the, first bindings for libcurl to really catch on and get used widely. It quickly got adopted as a default way for PHP users to transfer data. PHP has now been in that position for over a decade and it has turned out to be a fairly popular technology on the Internet (recent numbers indicated that something like a quarter of all sites on the Internet use PHP).

A few really high-demand sites are using PHP and are using libcurl in the backend. Facebook and Yahoo are two such sites.

Famous users

Nothing forces users to tell us they use curl or libcurl in their services or in their products. We usually only find out they do by accident, by reading about dialogues, documentation and license agreements. Of course some companies also just flat out tell us.

On our website, we used to collect the names of companies and products that use the project's products "in commercial environments". We did this mostly just to show off to other big brands: if these other guys can build products that depend on us, maybe you can, too?

The list of companies contains hundreds of names, but extracting some of the larger or more well-known brands, here's a pretty good list that, of course, is only a small selection:

Adobe, Altera, AOL, Apple, AT&T, BBC, Blackberry, BMW, Bosch, Broadcom, Chevrolet, Cisco, Comcast, Facebook, Google, Hitachi, Honeywell, HP, Huawei, HTC, IBM, Intel, LG, Mazda, Mercedes-Benz, Microsoft, Motorola, NASA, Netflix, Nintendo, Oracle, Panasonic, Philips, Pioneer, RBS, Samsung, SanDisk, SAP, SAS Institute, SEB, Sharp, Siemens, Sony, Spotify, Sun, Swisscom, Tomtom, Toshiba, VMware, Xilinx, Yahoo, Yamaha.

Famous high volume apps using curl

The Google YouTube app, the Google Photos app, Spotify, Instagram, Skype (on Android), bundled with iOS, Grand Theft Auto V, Fortnite.

Future

There is no slowdown in sight in curl's future, bugs reported, development pace or how Internet protocols are being developed or updated.

We are looking forward to support for more protocols, support for more features within the already supported protocols, and more and better APIs for libcurl to allow users to do transfers even better and faster.

The project casually maintains a TODO file holding a bunch of ideas that we could work on in the future. It also keeps a KNOWN_BUGS document with a list of known problems we would like to fix.

There is a ROADMAP document that describes some plans for the short-term that some of the active developers thought they would work on next. Of course, we cannot promise that we will always follow it.

We are highly dependent on developers to join in and work on what they want to get done, be it bug fixes or new features.

Network and protocols

Before diving in and talking about how to use curl to get things done, let's take a look at what all this networking is and how it works, using simplifications and some minor shortcuts to give an easy overview.

The basics are in the networking simplified chapter that tries to just draw a simple picture of what networking is from a curl perspective, and the protocols section which explains what exactly a "protocol" is and how that works.

Networking simplified

Networking means communicating between two endpoints on the Internet. The Internet is just a bunch of interconnected machines (computers really), each using its own individual addresses (called IP addresses). The addresses each machine has can be of different types and machines can even have temporary addresses. These computers are also called hosts.

Client and server

The computer, tablet or phone you sit in front of is usually called the client and the machine out there somewhere that you want to exchange data with is called the server. The main difference between the client and the server is in the roles they play. There is nothing that prevents the roles from being reversed in a subsequent operation.

A transfer initiative is always taken by the client, as the server cannot contact the client but the client can contact the server.

Which machine

When we as a client want to initiate a transfer from or to one of the machines out there (a server), we usually do not know its IP addresses but instead we usually know its name. The name of the machine to communicate with is typically embedded in the URL that we work with when we use tools like curl or a browser.

We might use a URL like http://example.com/index.html, which means the client connects to and communicates with the host named example.com.

Hostname resolving

Once the client knows the hostname, it needs to figure out which IP addresses the host with that name has so that it can contact it.

Converting the name to an IP address is called 'name resolving'. The name is resolved to one or a set of addresses. This is usually done by a DNS server, DNS being like a big lookup table that can convert names to addresses—all the names on the Internet, really. The computer normally already knows the address of a computer that runs the DNS server as that is part of setting up the network.

The network client therefore asks the DNS server, "Hello, please give me all the addresses for example.com". The DNS server responds with a list of addresses back. Or in case of spelling errors, it can answer back that the name does not exist.
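As a rough illustration of the lookup described above, here is a minimal Python sketch that asks the system resolver for the addresses of a name. It is not how curl itself resolves names. The `resolve` helper is a hypothetical name, and the port argument is only there because the underlying call expects one.

```python
import socket


def resolve(hostname, port=80):
    """Return the unique IP addresses `hostname` resolves to, in resolver order."""
    try:
        infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    except socket.gaierror:
        # The resolver could not find the name (a spelling error, for instance).
        return []
    addresses = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        ip = sockaddr[0]  # first element is the address for both IPv4 and IPv6
        if ip not in addresses:
            addresses.append(ip)
    return addresses
```

Calling `resolve("example.com")` on a networked machine would typically return one or more IPv4 and IPv6 addresses. The exact list depends on the resolver and on when you ask.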

Establish a connection

With one or more IP addresses for the host the client wants to contact, it sends a connect request. The connection it wants to establish is called a TCP (Transmission Control Protocol) or QUIC connection, which is like connecting an invisible string between two computers. Once established, the string can be used to send a stream of data in both directions.

If the client has received more than one address for the host, it traverses that list of addresses when connecting, and if one address fails it tries to connect to the next one, repeating until either one address works or they have all failed.
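The address traversal described above can be sketched as a simple loop. This is a deliberately simplified, sequential version for TCP only. The `connect_first` name and the five second timeout are assumptions made for the example, and real clients can be considerably smarter about how they order and overlap their attempts.

```python
import socket


def connect_first(addresses, port, timeout=5.0):
    """Try each address in order and return the first connected socket.

    Raises OSError, chained to the last failure, if no address works.
    """
    last_error = None
    for address in addresses:
        try:
            return socket.create_connection((address, port), timeout=timeout)
        except OSError as exc:
            last_error = exc  # remember why this one failed, then move on
    raise OSError(f"all {len(addresses)} addresses failed") from last_error
```

The returned socket is the "string" between the two machines: data written to it arrives at the other end, and the reverse.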

Connect to port numbers

When connecting with TCP or QUIC to a remote server, a client selects which port number to do that on. A port number is just a dedicated place for a particular service, which allows that same server to listen to other services on other port numbers at the same time.

Most common protocols have default port numbers that clients and servers use. For example, when using the http://example.com/index.html URL, that URL specifies a scheme called HTTP which tells the client that it should try TCP port number 80 on the server by default. If the URL uses HTTPS instead, the default port number is 443.

The URL can include a custom port number. If a port number is not specified, the client uses the default port for the scheme used in the URL.
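The port selection rule can be expressed in a few lines. The sketch below uses Python's standard URL parser rather than curl's own, and the small `DEFAULT_PORTS` table and the `effective_port` name are made up for the illustration. It covers only three schemes.

```python
from urllib.parse import urlsplit

# Well-known default ports for a few schemes (illustrative, not exhaustive).
DEFAULT_PORTS = {"http": 80, "https": 443, "ftp": 21}


def effective_port(url):
    """Return the port a client would connect to for `url`."""
    parts = urlsplit(url)
    if parts.port is not None:
        return parts.port  # an explicit port in the URL wins
    # Otherwise fall back to the default for the scheme.
    # Raises KeyError for schemes missing from the table above.
    return DEFAULT_PORTS[parts.scheme]
```

For example, `effective_port("http://example.com/index.html")` gives 80, while `effective_port("http://example.com:8080/")` gives 8080.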

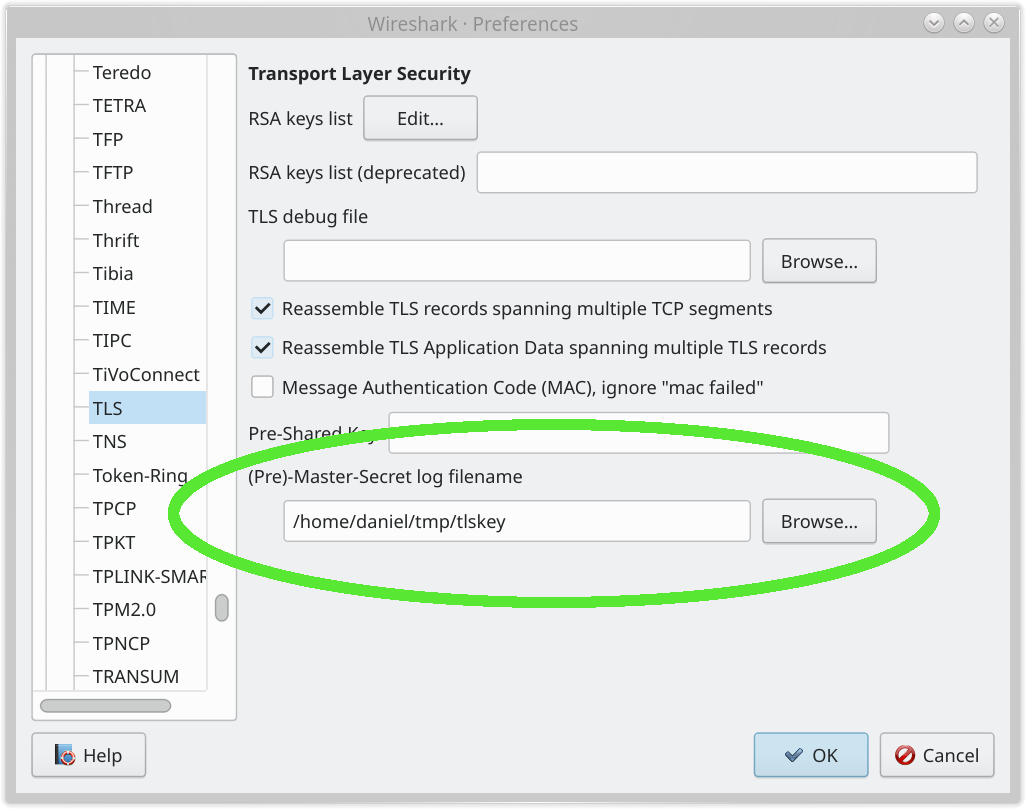

Security

After a TCP connection has been established, many transfers require that both sides negotiate a better security level before continuing (if for example HTTPS is used), which is done with TLS (Transport Layer Security). If so, the client and server do a TLS handshake first, and continue further only if that succeeds.

If the connection is done using QUIC, the TLS handshake is done automatically in the connect phase.

Transfer data

When the connected metaphorical string is attached to the remote computer, there is a connection established between the two machines. This connection can then be used to exchange data. This exchange is done using a protocol, as discussed in the following chapter.

Traditionally, a download is when data is transferred from a server to a client; conversely, an upload is when data is sent from the client to the server. The client is down here; the server is up there.

Disconnect

When a single transfer is completed, the connection may have served its purpose. It can then either be reused for further transfers, or it can be disconnected and closed.

Protocols

The language used to ask for data to get sent—in either direction—is called the protocol. The protocol describes exactly how to ask the server for data, or to tell the server that there is data coming.

Protocols are typically defined by the IETF (Internet Engineering Task Force), which hosts RFC documents that describe exactly how each protocol works: how clients and servers are supposed to act and what to send and so on.

What protocols does curl support?

curl supports protocols that allow data transfers in either or both directions. We usually also restrict ourselves to protocols which have a URI format described in an RFC or at least are somewhat widely used, as curl works primarily with URLs (URIs really) as the input key that specifies the transfer.

The latest curl (as of this writing) supports these protocols:

DICT, FILE, FTP, FTPS, GOPHER, GOPHERS, HTTP, HTTPS, IMAP, IMAPS, LDAP, LDAPS, MQTT, POP3, POP3S, RTMP, RTSP, SCP, SFTP, SMB, SMBS, SMTP, SMTPS, TELNET, TFTP, WS, WSS

To complicate matters further, the protocols often exist in different versions or flavors as well.

What other protocols are there?

The world is full of protocols, both old and new. Old protocols get abandoned and dropped and new ones get introduced. There is never a state of stability; the situation changes from day to day and year to year. You can rest assured that there will be new protocols added to the list above in the future and that there will be new versions of the protocols already listed.

There are, of course, already other protocols in existence that curl does not yet support. We are open to supporting more protocols that suit the general curl paradigms; we just need developers to write the necessary code adjustments for them.

How are protocols developed?

Both new versions of existing protocols and entirely new protocols are usually developed by persons or teams that feel that the existing ones are not good enough. Something about them makes them not suitable for a particular use case or perhaps some new idea has popped up that could be applied to improve things.

Of course, nothing prevents anyone from developing a protocol entirely on their own at their own pleasure in their own backyard, but the major protocols are usually brought to the IETF at a fairly early stage where they are then discussed, refined, debated and polished and then eventually, ideally, turned into a published RFC document.

Software developers then read the RFC specifications and deploy their code in the world based on their interpretations of the words in those documents. It sometimes turns out that some of the specifications are subject to vastly different interpretations or sometimes the engineers are just lazy and ignore sound advice in the specs and deploy something that does not adhere. Writing software that interoperates with other implementations of the specifications can therefore end up being hard work.

How much do protocols change?

Like software, protocol specifications are frequently updated and new protocol versions are created.

Most protocols allow some level of extensibility which makes new extensions show up over time, extensions that make sense to support.

The interpretation of a protocol sometimes changes even if the spec remains the same.

The protocols mentioned in this chapter are all Application Protocols, which means they are transferred over lower-level protocols, like TCP, UDP and TLS. Those are also themselves protocols that change over time, get new features and get attacked, so that new ways of handling security, etc., force curl to adapt and change.

About adhering to standards and who is right

Generally, there are protocol specs that tell us how to send and receive data for specific protocols. The protocol specs we follow are RFCs put together and published by the IETF.

Some protocols are not properly documented in a final RFC, like, for example, SFTP for which our implementation is based on an Internet-draft that is not even the last available one.

Protocols are, however, spoken by two parties and like in any given conversation, there are then two sides of understanding something or interpreting the given instructions in a spec. Also, lots of network software is written without the authors paying close attention to the spec so they end up taking some shortcuts, or perhaps they just interpreted the text differently. Sometimes even mistakes and bugs make software behave in ways that are not mandated by the spec and sometimes even downright forbidden in the specs.

In the curl project we use the published specs as rules on how to act until we learn anything else. If popular alternative implementations act differently than what we think the spec says and that alternative behavior is what works widely on the big Internet, then chances are we change foot and instead decide to act like those others. If a server refuses to talk with us when we think we follow the spec but works fine when we bend the rules ever so slightly, then we probably end up bending them exactly that way—if we can still work successfully with other implementations.

Ultimately, it is a personal decision and up for discussion in every case where we think a spec and the real world do not align.

In the worst cases we introduce options to let application developers and curl users have the final say on what curl should do. I say worst because it is often really tough to ask users to make these decisions as it usually involves tricky details and weirdness going on and it is a lot to ask of users. We should always do our best to avoid pushing such protocol decisions to users.

curl protocols

curl supports about 28 protocols. We say about because it depends on how you count and what you consider to be distinctly different protocols.

DICT

DICT is a dictionary network protocol. It allows clients to ask dictionary servers about a meaning or explanation for words. See RFC 2229. Dict servers and clients use TCP port 2628.

FILE

FILE is not actually a network protocol. It is a URL scheme that allows you to tell curl to get a file from the local file system instead of getting it over the network from a remote server. See RFC 1738.

FTP

FTP stands for File Transfer Protocol and is an old (originates in the early 1970s) way to transfer files back and forth between a client and a server. See RFC 959. It has been extended greatly over the years. FTP servers and clients use TCP port 21 plus one more port, though the second one is usually dynamically established during communication.

See the external page FTP vs HTTP for how it differs from HTTP.

FTPS

FTPS stands for Secure File Transfer Protocol. It follows the tradition of appending an 'S' to the protocol name to signify that the protocol is done like normal FTP but with an added SSL/TLS security layer. See RFC 4217.

This protocol is problematic to use through firewalls and other network equipment.

GOPHER

Designed for "distributing, searching, and retrieving documents over the Internet", Gopher is somewhat of the grandfather to HTTP as HTTP has mostly taken over completely for the same use cases. See RFC 1436. Gopher servers and clients use TCP port 70.

GOPHERS

Gopher over TLS. A recent extension to the old protocol.

HTTP

The Hypertext Transfer Protocol, HTTP, is the most widely used protocol for transferring data on the web and over the Internet. See RFC 9110 for general HTTP Semantics, RFC 9112 for HTTP/1.1, RFC 9113 for HTTP/2 and RFC 9114 for HTTP/3. HTTP servers and clients use TCP port 80.

HTTPS

Secure HTTP is HTTP done over an SSL/TLS connection. See RFC 2818. HTTPS servers and clients use TCP port 443, unless they speak HTTP/3 which then uses QUIC (RFC 8999) and is done over UDP.

IMAP

The Internet Message Access Protocol, IMAP, is a protocol for accessing, controlling and "reading" email. See RFC 3501. IMAP servers and clients use TCP port 143. Whilst connections to the server start out as cleartext, SSL/TLS communication may be supported by the client explicitly requesting to upgrade the connection using the STARTTLS command. See RFC 2595.

IMAPS

Secure IMAP is IMAP done over an SSL/TLS connection. Such connections implicitly start out using SSL/TLS and as such servers and clients use TCP port 993 to communicate with each other. See RFC 8314.

LDAP

The Lightweight Directory Access Protocol, LDAP, is a protocol for accessing and maintaining distributed directory information. Basically a database lookup. See RFC 4511. LDAP servers and clients use TCP port 389.

LDAPS

Secure LDAP is LDAP done over an SSL/TLS connection.

MQTT

Message Queuing Telemetry Transport, MQTT, is a protocol commonly used in IoT systems for interchanging data mostly involving smaller devices. It is a so-called "publish-subscribe" protocol.

POP3

The Post Office Protocol version 3 (POP3) is a protocol for retrieving email from a server. See RFC 1939. POP3 servers and clients use TCP port 110. Whilst connections to the server start out as cleartext, SSL/TLS communication may be supported by the client explicitly requesting to upgrade the connection using the STLS command. See RFC 2595.

POP3S

Secure POP3 is POP3 done over an SSL/TLS connection. Such connections implicitly start out using SSL/TLS and as such servers and clients use TCP port 995 to communicate with each other. See RFC 8314.

RTMP

The Real-Time Messaging Protocol (RTMP) is a protocol for streaming audio, video and data. RTMP servers and clients use TCP port 1935.

RTSP

The Real Time Streaming Protocol (RTSP) is a network control protocol to control streaming media servers. See RFC 2326. RTSP servers and clients use TCP and UDP port 554.

SCP

The Secure Copy (SCP) protocol is designed to copy files to and from a remote SSH server. SCP servers and clients use TCP port 22.

SFTP

The SSH File Transfer Protocol (SFTP) is a protocol that provides file access, file transfer, and file management over a reliable data stream. SFTP servers and clients use TCP port 22.

SMB

The Server Message Block (SMB) protocol is also known as CIFS. It is an application-layer network protocol mainly used for providing shared access to files, printers, and serial ports and miscellaneous communications between nodes on a network. SMB servers and clients use TCP port 445.

SMBS

SMB done over TLS.

SMTP

The Simple Mail Transfer Protocol (SMTP) is a protocol for email transmission. See RFC 5321. SMTP servers and clients use TCP port 25. Whilst connections to the server start out as cleartext, SSL/TLS communication may be supported by the client explicitly requesting to upgrade the connection using the STARTTLS command. See RFC 3207.

SMTPS

Secure SMTP is SMTP done over an SSL/TLS connection. Such connections implicitly start out using SSL/TLS and as such servers and clients use TCP port 465 to communicate with each other. See RFC 8314.

TELNET

TELNET is an application layer protocol used over networks to provide a bidirectional interactive text-oriented communication facility using a virtual terminal connection. See RFC 854. TELNET servers and clients use TCP port 23.

TFTP

The Trivial File Transfer Protocol (TFTP) is a protocol for doing simple file transfers over UDP to get a file from or put a file onto a remote host. TFTP servers and clients use UDP port 69.

WS

WebSocket is a bidirectional TCP-like protocol, set up over an HTTP(S) request. WS is the scheme for the clear text version done over plain HTTP.

WSS

WebSocket is a bidirectional TCP-like protocol, set up over an HTTP(S) request. WSS is the scheme for the secure version done over HTTPS.

HTTP basics

HTTP is a protocol that is easy to learn the basics of. A client connects to a server—and it is always the client that takes the initiative—sends a request and receives a response. Both the request and the response consist of headers and a body. There can be little or a lot of information going in both directions.

An HTTP request sent by a client starts with a request line, followed by headers and then optionally a body. The most common HTTP request is probably the GET request which asks the server to return a specific resource, and this request does not contain a body.

When a client connects to 'example.com' and asks for the '/' resource, it sends a GET without a request body:

GET / HTTP/1.1
User-agent: curl/2000
Host: example.com

…the server could respond with something like below, with response headers and a response body ('hello'). The first line in the response also contains the response code and the specific version the server supports:

HTTP/1.1 200 OK
Server: example-server/1.1
Content-Length: 5
Content-Type: text/plain

hello

If the client would instead send a request with a small request body ('hello'), it could look like this:

POST / HTTP/1.1
Host: example.com
User-agent: curl/2000
Content-Length: 5

hello

A server always responds to an HTTP request unless something is wrong.
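To make the message layout concrete, here is a small sketch that serializes a request like the ones above and splits a response into its parts. It is an illustration only, assuming a complete response held in memory with a plain (non-chunked) body. The `build_request` and `parse_response` names are invented for this example and are not libcurl functions.

```python
def build_request(method, host, path="/", body=b""):
    """Serialize a minimal HTTP/1.1 request as bytes."""
    lines = [f"{method} {path} HTTP/1.1", f"Host: {host}"]
    if body:
        lines.append(f"Content-Length: {len(body)}")
    # Each header line ends with CRLF; an empty line separates headers and body.
    head = "\r\n".join(lines) + "\r\n\r\n"
    return head.encode("ascii") + body


def parse_response(raw):
    """Split a complete HTTP/1.1 response into (status code, headers, body)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    _version, code, _reason = status_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return int(code), headers, body
```

With these helpers, `build_request("POST", "example.com", body=b"hello")` produces a request with a `Content-Length: 5` header followed by the body, mirroring the POST example above.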

The URL converted to a request

When an HTTP client is given a URL to operate on, that URL is then used, picked apart and those parts are used in various places in the outgoing request to the server. Let's take an example URL:

https://www.example.com/path/to/file

- https means that curl uses TLS to the remote port 443 (which is the default port number when none is specified in the URL).

- www.example.com is the hostname that curl resolves to one or more IP addresses to connect to. This hostname is also used in the HTTP request in the Host: header.

- /path/to/file is used in the HTTP request to tell the server which exact document/resource curl wants to fetch.
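The mapping from URL parts to request parts can be sketched as follows. This uses Python's standard URL parser, not curl's, handles only http and https, and always produces a GET. The `request_head_for` name is hypothetical.

```python
from urllib.parse import urlsplit


def request_head_for(url):
    """Return (hostname, port, use_tls, request_head) derived from `url`."""
    parts = urlsplit(url)
    use_tls = parts.scheme == "https"           # the scheme decides whether TLS is used
    default_port = 443 if use_tls else 80
    port = parts.port if parts.port is not None else default_port
    path = parts.path or "/"                    # an empty path means the root resource
    if parts.query:
        path += "?" + parts.query
    # The Host: header carries the hostname, plus the port when one was given.
    host_header = parts.hostname
    if parts.port is not None:
        host_header += f":{parts.port}"
    head = f"GET {path} HTTP/1.1\r\nHost: {host_header}\r\n\r\n"
    return parts.hostname, port, use_tls, head
```

For `https://www.example.com/path/to/file` this yields the hostname to resolve, port 443, TLS enabled, and a request whose first line is `GET /path/to/file HTTP/1.1`.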

Install curl and libcurl

curl is totally free, open and available. There are numerous ways to get it and install it for most operating systems and architectures. This section gives you some answers to start with, but is not a complete reference.

Some operating systems ship curl by default. Some do not.

In addition, you can always download the source from curl.se or find binary packages to download from there.

Commonly, libcurl is installed at the same time as curl.
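Before installing anything, it can be worth checking whether the tool is already present. A minimal sketch, assuming a `curl` executable may or may not be on the search path. The `curl_version` helper is a made-up name for this example.

```python
import shutil
import subprocess


def curl_version():
    """Return the first line of `curl --version`, or None if curl is not found."""
    path = shutil.which("curl")  # look for the executable on PATH
    if path is None:
        return None
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    lines = result.stdout.splitlines()
    return lines[0] if lines else None
```

When curl is installed, the returned line names the tool version along with the libcurl version and the libraries it was built with.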

Linux

Linux distributions come with package managers that let you install software that they offer. Most Linux distributions offer curl and libcurl to be installed if they are not installed by default.

Ubuntu and Debian

apt is a tool to install prebuilt packages on Debian Linux and Ubuntu Linux distributions and derivatives.

To install the curl command-line tool, you usually just run:

apt install curl

…and that then makes sure the dependencies are installed and usually libcurl is then also installed as an individual package.

If you want to build applications against libcurl, you need a development package installed to get the include headers and some additional documentation, etc. You can then select a libcurl with the TLS backend you prefer:

apt install libcurl4-openssl-dev

or

apt install libcurl4-gnutls-dev

Red Hat and CentOS

With Red Hat Linux and CentOS Linux derivatives, you use yum to install packages. Install the command-line tool with:

yum install curl

You install the libcurl development package (with include files and some docs, etc.) with this:

yum install libcurl-devel

Fedora

Fedora Workstation and other Fedora based distributions use dnf to install packages.

Install the command-line tool with:

dnf install curl

To install the libcurl development package you run:

dnf install libcurl-devel

Immutable Fedora distributions

Distributions such as Silverblue, Kinoite, Sericea, Onyx, ... use rpm-ostree to install packages. Remember to restart the system after install.

rpm-ostree install curl

To install the libcurl development package you run:

rpm-ostree install libcurl-devel

nix

Nix is the default package manager of the NixOS distribution, but it can also be used on any Linux distribution.

To install the command-line tool:

nix-env -i curl

Arch Linux

curl is located in the core repository of Arch Linux. This means it should be installed automatically if you follow the normal installation procedure.

If curl is not installed, Arch Linux uses pacman to install packages:

pacman -S curl

SUSE and openSUSE

With SUSE Linux and openSUSE Linux you use zypper to install packages. To install the curl command-line utility:

zypper install curl

In order to install the libcurl development package you run:

zypper install libcurl-devel

SUSE SLE Micro and openSUSE MicroOS

These versions of SUSE/openSUSE Linux are immutable OSes and have a read-only root file system. To install packages, you use transactional-update instead of zypper. To install the curl command-line utility:

transactional-update pkg install curl

To install the libcurl development package:

transactional-update pkg install libcurl-devel

Gentoo

This package installs the tool, libcurl, headers, pkg-config files, etc.:

emerge net-misc/curl

Void Linux

With Void Linux you use xbps-install to install packages.

To install the curl command-line utility:

xbps-install curl

In order to install the libcurl development package:

xbps-install libcurl-devel

Windows

Windows 10 has come with the curl tool bundled with the operating system since version 1803. If you have an older Windows version or just want to upgrade to the latest version shipped by the curl project, download the latest official curl release for Windows from curl.se/windows and install that.

There are several different ways to get curl and libcurl onto your Windows systems:

MSYS2

MSYS2 is a popular build system for Windows based on mingw-w64 and includes both gcc and clang compilers. MSYS2 uses a package manager named pacman (a port from Arch Linux) and has about 2000 precompiled mingw-packages. MSYS2 is designed to build standalone software: the binaries built with mingw-w64 compilers do not depend on MSYS2 itself[^1].

Get curl and libcurl on MSYS2

Current information about the mingw-w64-curl package can be found on the msys2 website: https://packages.msys2.org/base/mingw-w64-curl. Here we can also find installation instructions for the various available flavors. For example to install the default x64 binary for curl we run:

pacman -Sy mingw-w64-x86_64-curl

This package contains both the curl command-line tool and the libcurl headers and shared libraries. The default curl packages are built with the OpenSSL backend and hence depend on mingw-w64-x86_64-openssl. There are also mingw-w64-x86_64-curl-winssl and mingw-w64-x86_64-curl-gnutls packages; refer to the msys2 website for more details.

Just like on Linux, we can use pkg-config to query the flags needed to build against libcurl. Start msys2 using the mingw64 shell (which automatically sets the path to include /mingw64) and run:

pkg-config --cflags libcurl

# -IC:/msys64/mingw64/include

pkg-config --libs libcurl

# -LC:/msys64/mingw64/lib -lcurl
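The flags reported by pkg-config are normally passed straight to the compiler. The sketch below composes such a compile command and prints it; the source file name app.c, the function name and the gcc default are assumptions made for this example. It honors the PKG_CONFIG and CC environment variables, a common convention in build scripts.

```shell
#!/bin/sh
# Print a compiler command line that builds the given C file against libcurl.
# Fails if pkg-config is missing or does not know about libcurl.
libcurl_compile_cmd() {
  src=$1
  pc=${PKG_CONFIG:-pkg-config}
  if ! "$pc" --exists libcurl 2>/dev/null; then
    echo "libcurl development files not found" >&2
    return 1
  fi
  echo "${CC:-gcc} $("$pc" --cflags libcurl) $src -o ${src%.c} $("$pc" --libs libcurl)"
}

# app.c is a hypothetical source file name.
if cmd=$(libcurl_compile_cmd app.c); then
  echo "$cmd"
else
  echo "Install a libcurl development package first"
fi
```

Printing the command rather than running it makes it easy to check the flags before building anything.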

The pacman package manager installs precompiled binaries. Next up we explain how to use pacman to build curl locally, for example to customize the configuration.

Building libcurl on MSYS2

Building packages with pacman is almost just as simple as installing. The entire process is contained in the PKGBUILD file from the mingw-w64-curl package. We can easily modify the file to rebuild the package ourselves.

If we start with a clean msys2 installation, we first want to install some build tools, like autoconf, patch and git. Start the msys2 shell and run:

# Sync the repositories

pacman -Syu

# Install git, autoconf, patch, etc

pacman -S git base-devel

# Install GCC for x86_64

pacman -S mingw-w64-x86_64-toolchain

Now clone the mingw-packages repository and go to the mingw-w64-curl package:

git clone https://github.com/msys2/MINGW-packages

cd MINGW-packages/mingw-w64-curl

This directory contains the PKGBUILD file and patches that are used for building curl. Have a look at the PKGBUILD file to see what is going on. Now to compile it, we can do:

makepkg-mingw --syncdeps --skippgpcheck

That is it. The --syncdeps parameter automatically checks and prompts to install dependencies of mingw-w64-curl if these are not yet installed. Once the process is complete you have 3 new files in the current directory, for example:

mingw-w64-x86_64-curl-7.80.0-1-any.pkg.tar.zst

mingw-w64-x86_64-curl-gnutls-7.80.0-1-any.pkg.tar.zst

mingw-w64-x86_64-curl-winssl-7.80.0-1-any.pkg.tar.zst

Use the pacman -U command to install such a local package file:

pacman -U mingw-w64-x86_64-curl-winssl-7.80.0-1-any.pkg.tar.zst

Have a look at the msys2 docs or join the gitter to learn more about building with pacman and msys2.

[^1]: Be careful not to confuse the mingw-package mingw-w64-curl with the msys-packages curl and curl-devel. The latter are part of msys2 environment itself (e.g. to support pacman downloads), but not suitable for redistribution. To build redistributable software that does not depend on MSYS2 itself, you always need mingw-w64-… packages and toolchains.

vcpkg

Vcpkg helps you manage C and C++ libraries on Windows, Linux and macOS.

There is no curl package on vcpkg, only libcurl.

Install libcurl

vcpkg.exe install curl:x64-windows

macOS

macOS has come with the curl tool bundled with the operating system for many years. If you want to upgrade to the latest version shipped by the curl project, we recommend installing Homebrew (a macOS software package manager) and then installing the curl package with it:

brew install curl

Note that when installing curl, brew does not create a curl symlink in the default Homebrew folder, to avoid clashes with the macOS version of curl.

Run the following to make brew curl the default one in your shell:

echo 'export PATH="$(brew --prefix)/opt/curl/bin:$PATH"' >> ~/.zshrc

source ~/.zshrc
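The export line works because the shell searches the directories in PATH from left to right and runs the first curl it finds. Appending that line to ~/.zshrc more than once leaves duplicate entries behind, so here is a small sketch of a helper that prepends a directory only when it is not already listed. The helper name and the Homebrew directory shown are assumptions; brew --prefix reports the real location on your system.

```shell
#!/bin/sh
# Prepend directory $1 to the PATH-style string $2 unless it is already listed.
prepend_path() {
  case ":$2:" in
    *":$1:"*) printf '%s\n' "$2" ;;
    *)        printf '%s\n' "$1:$2" ;;
  esac
}

# Assumed Homebrew location of curl, for illustration only.
brew_curl_bin="/opt/homebrew/opt/curl/bin"

new_path=$(prepend_path "$brew_curl_bin" "$PATH")
printf 'PATH would become: %s\n' "$new_path"
```

After changing PATH, command -v curl prints the curl binary the shell now picks.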

Get libcurl for macOS

When you install curl the tool with Homebrew as described above, it also installs libcurl together with its associated headers.

libcurl is also installed with macOS itself and always present, and if you install the development environment Xcode from Apple, you can use libcurl directly without having to install anything extra as the curl include files are bundled there.

Container

Both docker and podman are containerization tools. The docker image is hosted at https://hub.docker.com/r/curlimages/curl

You can run the latest version of curl with the following command:

Command for docker:

docker run -it --rm docker.io/curlimages/curl www.example.com

Command for podman:

podman run -it --rm docker.io/curlimages/curl www.example.com

Running curl seamlessly in container

It is possible to make an alias to seamlessly run curl inside a container as if it is a native application installed on the host OS.

The commands below define curl as an alias for your containerization tool in the Bash, zsh and fish shells:

Bash or zsh

Invoke curl with docker:

alias curl='docker run -it --rm docker.io/curlimages/curl'

Invoke curl with podman:

alias curl='podman run -it --rm docker.io/curlimages/curl'

Fish

Invoke curl with docker:

alias -s curl='docker run -it --rm docker.io/curlimages/curl'

Invoke curl with podman:

alias -s curl='podman run -it --rm docker.io/curlimages/curl'

Then simply invoke curl www.example.com to make a request.
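Aliases are normally only expanded in interactive shells. For scripts, a shell function can play the same role; the following is a sketch under that assumption. It picks whichever of docker or podman is installed and uses the same run options as the aliases. The names pick_tool and ccurl are made up for this example, and ccurl is deliberately not called curl so that a natively installed curl is not shadowed.

```shell
#!/bin/sh
# Print the first command from the argument list that exists on this system.
pick_tool() {
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      printf '%s\n' "$tool"
      return 0
    fi
  done
  return 1
}

# Run curl inside a container, using docker if present, otherwise podman.
ccurl() {
  engine=$(pick_tool docker podman) || {
    echo "neither docker nor podman found" >&2
    return 1
  }
  "$engine" run -it --rm docker.io/curlimages/curl "$@"
}
```

With the functions defined, ccurl www.example.com makes a request just like the aliased curl does.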

Running curl in kubernetes

Sometimes it can be useful to troubleshoot k8s networking with curl, like this:

kubectl run -i --tty curl --image=curlimages/curl --restart=Never \

-- "-m 5" www.example.com

Source code

The source code is, of course, the actual engine parts of this project. After all, it is a software project.

curl and libcurl are written in C.

Hosting and download

You can always find the source code for the latest curl and libcurl release on the official curl website curl.se. There are also checksums and digital signatures provided to help you verify that what ends up on your local system when you download the files is the same bytes, in the same order, as were originally uploaded there by the curl team.
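Checking a download against a published checksum can be sketched as follows, assuming the checksum is a SHA-256 digest and that either sha256sum or shasum is available. The function names are made up for this example.

```shell
#!/bin/sh
# Print the SHA-256 digest of a file using whichever tool is available.
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$1" | cut -d ' ' -f 1
  elif command -v shasum >/dev/null 2>&1; then
    shasum -a 256 "$1" | cut -d ' ' -f 1
  else
    echo "no SHA-256 tool found" >&2
    return 1
  fi
}

# Succeed only if the digest of file $1 equals the expected digest $2.
verify_sha256() {
  actual=$(sha256_of "$1") || return 1
  [ "$actual" = "$2" ]
}
```

You would call it as verify_sha256 followed by the downloaded file name and the digest copied from the download page. A checksum only shows that the file matches what the page lists; a digital signature additionally ties it to the signer's key. With GnuPG and the signer's public key imported, gpg --verify takes the signature file followed by the downloaded file.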

If you would rather work directly with the curl source code off the source code repository, you find all details in the curl GitHub repository.

Clone the code

git clone https://github.com/curl/curl.git

This gets the latest curl code downloaded and unpacked in a directory on your local system.

- Open Source

- Code layout

- Handling build options

- Code style

- Contributing

- Reporting vulnerabilities

- Website

Open Source

What is Open Source

Generally, Open Source software is software that can be freely accessed, used, changed, and shared (in modified or unmodified form) by anyone. Open Source software is typically made by many people, and distributed under licenses that comply with the definition.

Free Software is an older and related term that mostly says the same thing for all our intents and purposes, but we stick to the term Open Source in this document for simplicity.

License

curl and libcurl are distributed under an Open Source license known as an MIT license derivative. It is short, simple and easy to grasp. It follows here in full:

COPYRIGHT AND PERMISSION NOTICE

Copyright © 1996 - 2025, Daniel Stenberg, <daniel@haxx.se>, and many

contributors, see the THANKS file.

All rights reserved.

Permission to use, copy, modify, and distribute this software for any

purpose with or without fee is hereby granted, provided that the above

copyright notice and this permission notice appear in all copies.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS

OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF

MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OF

THIRD PARTY RIGHTS. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS

BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN

ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN

CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

SOFTWARE.

Except as contained in this notice, the name of a copyright holder shall

not be used in advertising or otherwise to promote the sale, use or

other dealings in this Software without prior written authorization of

the copyright holder.

This is legalese that says you are allowed to change the code, redistribute the code, redistribute binaries built from the code and build proprietary code with it, without anyone requiring you to give any changes back to the project—but you may not claim that you wrote it.

Early on in the project we iterated over a few other licenses before we settled on this one. We started out with the GPL, then tried the MPL and landed on this MIT derivative. We do not intend to ever change the license again.

Copyright

Copyright is a legal right granted by the law of a country that gives the creator of an original work exclusive rights for its use and distribution.

The copyright owner(s) can agree to allow others to use their work by licensing it. That is what we do in the curl project. The copyright is the foundation on which the licensing works.

Daniel Stenberg is the owner of most copyrights in the curl project.

Independent

A lot of Open Source projects are run within umbrella organizations. Such organizations include the GNU project, the Apache Software Foundation, a larger company that funds the project or similar. The curl project is not part of any such larger organization but is completely independent and free.

No company controls curl's destiny and the curl project does not need to follow any umbrella organization's guidelines.

curl is not a formal company, organization or a legal entity of any kind. curl is just an informal collection of humans, distributed across the globe, who work together on a software project.

Legal

The curl project obeys national laws of the countries in which it works. However, it is a highly visible international project, downloadable and usable in effectively every country on earth, so some local laws could be broken when using curl. That is just the nature of it and if uncertain, you should check your own local situation.